Semantic Mapping for Navigation

Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments

Authors: Jianhao Jiao, Ruoyu Geng, Yuanhang Li, Ren Xin, Bowen Yang, Jin Wu, Dimitrios Kanoulas, Rui Fan, et al.

Acknowledgement: This is joint research work supported by HKUST, HKUST(GZ), Tongji University, and University College London.

Please click the text for the open-source Code and Dataset AvA.

Demo

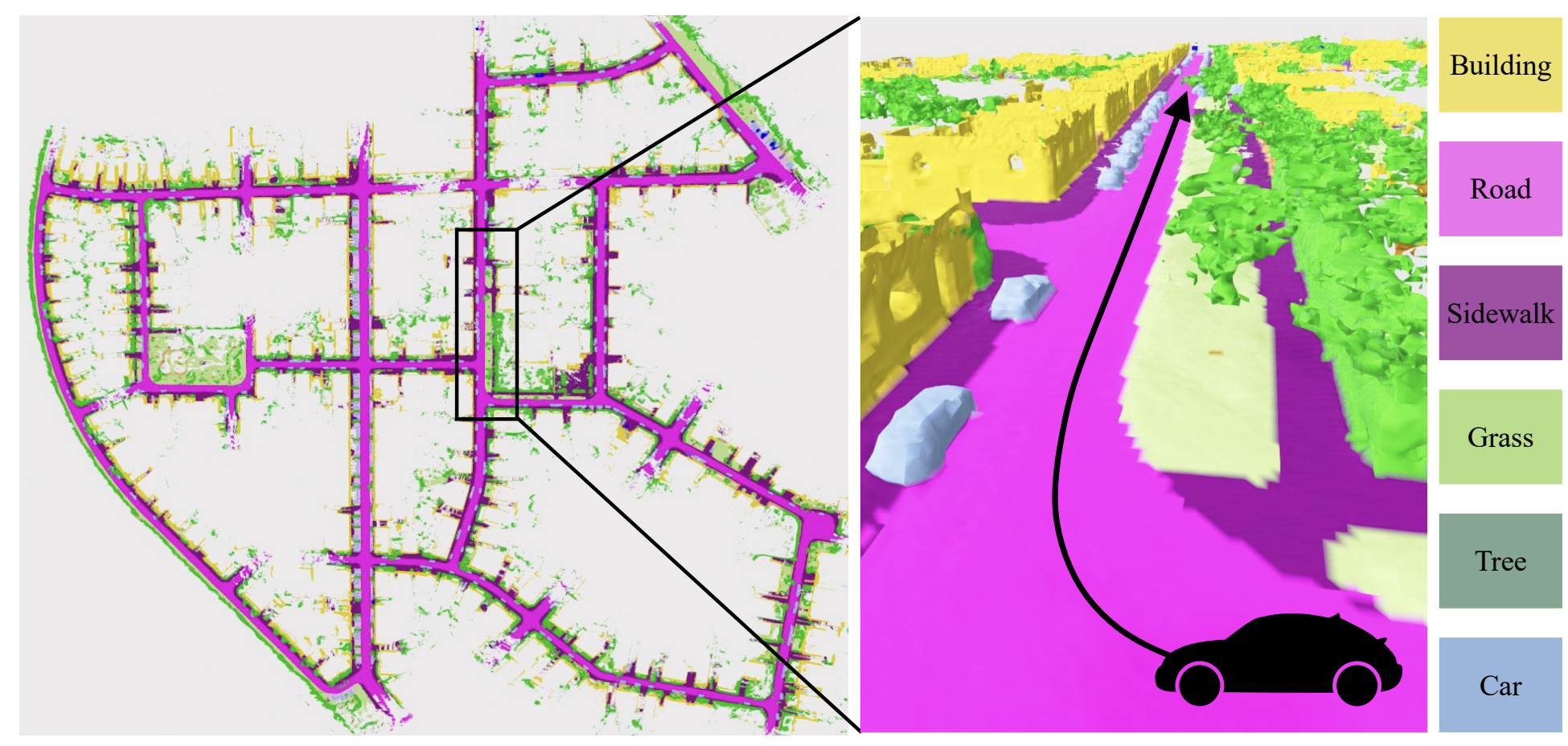

The semantic mapping process.

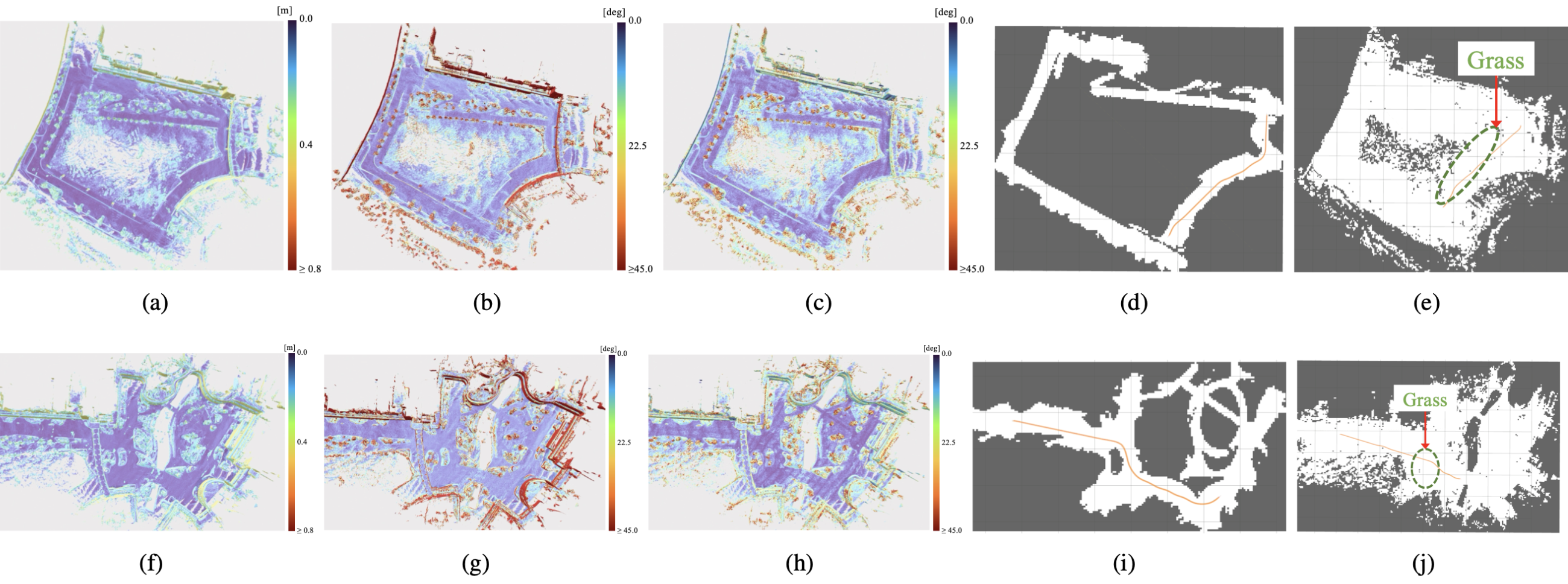

Traversability estimation and motion planning on the resulting occupancy map.

Background

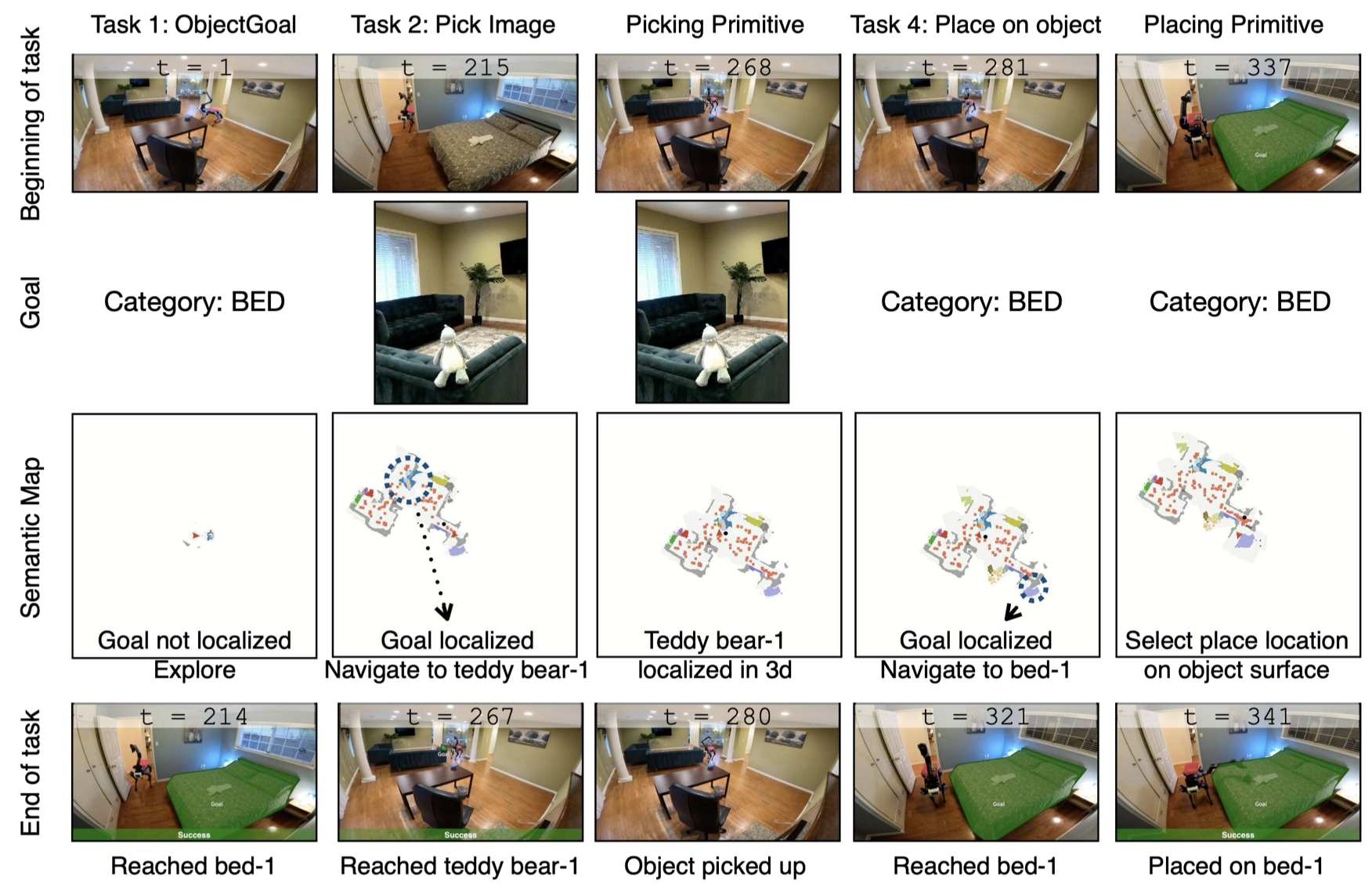

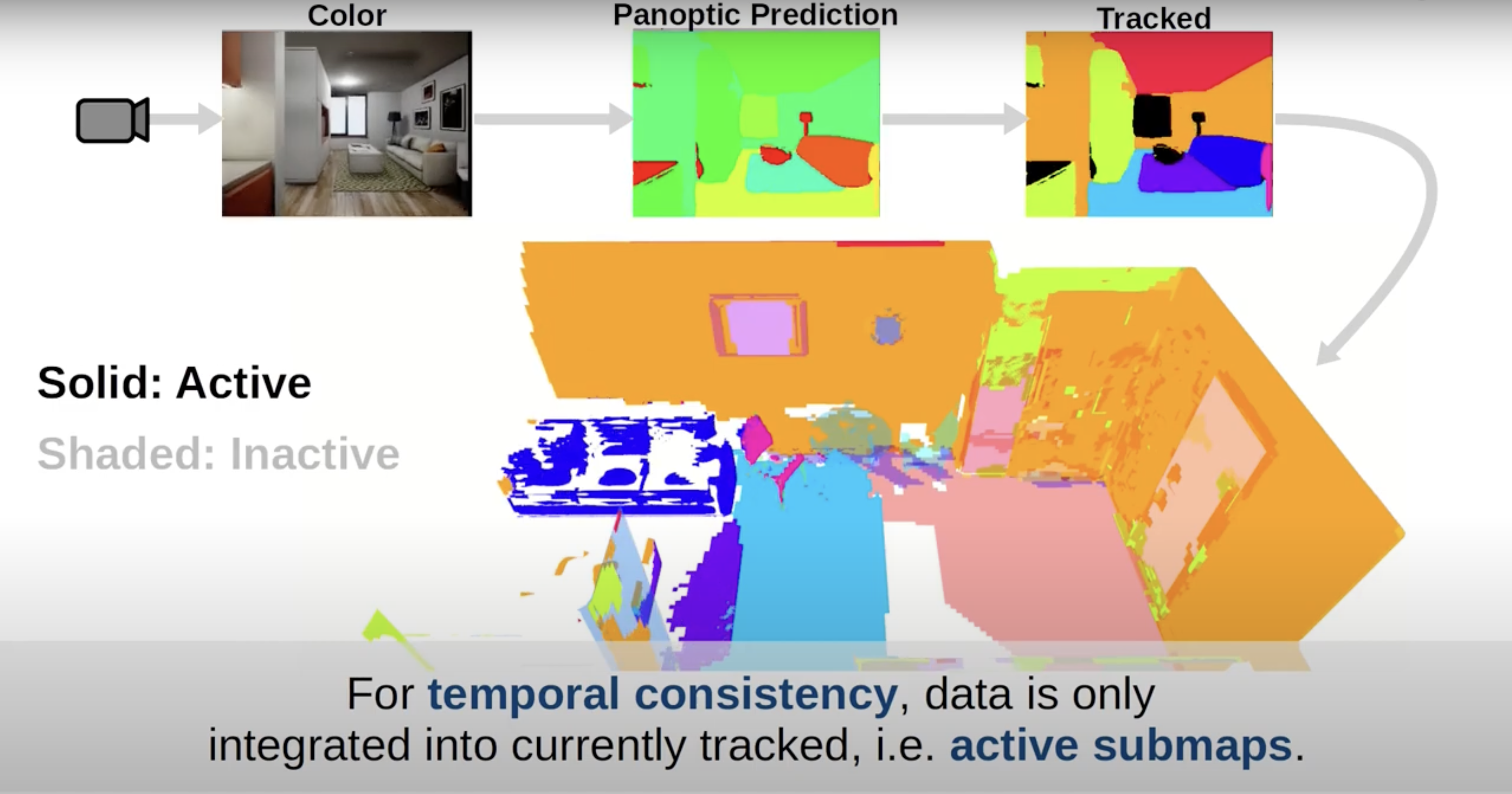

Mapping is the process of establishing an internal representation of an environment. Metric-semantic maps, which include human-readable information (i.e., map elements are labeled), offer a deeper understanding of environments. This contrasts with pure metric maps, which primarily store the geometric structure of a scene. Pure metric maps are typically defined by the positions of landmarks (a point cloud map), distances to obstacles (a distance field), or binary values that represent free and occupied space (an occupancy map). Recent works (shown below) in scene abstraction (e.g., Hydra), long-term map update (e.g., Panoptic Mapping), exploration (e.g., Semantic Exploration), and grasping (e.g., GOAT) have demonstrated the potential of semantic maps, boosting the development of embodied intelligence.

Take the KITTI scenario as an example. Many objects such as trees and buildings appear, and the street includes a sidewalk designed specifically for pedestrians. By incorporating semantics, the map enables the vehicle to navigate along the road, finding a collision-free path that does not cross sidewalks or grassland. In contrast, geometry-based traversability extraction methods often struggle to distinguish between roads, sidewalks, and grass because their structures are similar. This paper focuses on the point-goal navigation task of ground robots in complicated unstructured environments (e.g., campus and off-road scenarios), where abundant semantic elements must be recognized to guarantee safe and highly interactive navigation.

Methodology

An overview of the method is shown below. Please refer to the preprint for more technical details.

Metric-Semantic Mapping

Building a semantic map of a large-scale outdoor environment is time-consuming. Therefore, in this work, we propose a real-time metric-semantic mapping system that leverages LiDAR-visual-inertial sensing to estimate the state of the robot in real time and construct a lightweight, global metric-semantic mesh map of the environment. We build upon NvBlox and thus use a signed distance field (SDF)-based representation. This representation has the advantage of reconstructing surfaces at sub-voxel resolution, which improves the accuracy of the map. While the focus of this paper is on mapping outdoor environments, the proposed solution is extensible and easily adaptable to the applications mentioned above. We publicly release our code and datasets in Cobra. The mapping system consists of four primary components:

- State Estimator modifies the R3LIVE system (an Extended Kalman Filter-based LiDAR-visual-inertial odometry) to estimate sensor poses in real time, together with a local and sparse colored point cloud.

- Semantic Segmentation is a pre-trained convolutional neural network (CNN) that assigns a class label to every single pixel of each input image. A novel dataset that categorizes objects into diverse classes for the network training is also developed.

- Metric-Semantic Mapping takes sensor measurements and poses as input, and constructs a global 3D mesh of the environment using a TSDF-based volumetric representation combined with 2D semantic segmentation. The mapping runs in parallel on the GPU and thus achieves real-time performance (<7 ms per LiDAR frame). We also propose a non-projective distance under the TSDF formulation, leading to a more accurate and complete surface reconstruction.

- Traversability Analysis identifies drivable areas by analyzing the geometric and semantic attributes of the resulting mesh map, thus narrowing the search space for subsequent motion planning. For example, we can classify "road" regions as drivable for vehicles, while "sidewalk" or "grass" regions are not.
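The volumetric fusion step of the Metric-Semantic Mapping component can be illustrated with a small sketch. The Python below is not the authors' GPU implementation and does not reproduce the proposed non-projective distance. Under simplifying assumptions, it only shows the standard weighted-average TSDF update, a per-voxel majority vote over 2D segmentation labels, and the linear interpolation that places a surface at sub-voxel resolution. The names `Voxel`, `integrate`, and `zero_crossing`, as well as the truncation distance and weight cap, are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Voxel:
    """One TSDF voxel with a running semantic label histogram (illustrative only)."""
    tsdf: float = 0.0      # truncated signed distance to the surface, in metres
    weight: float = 0.0    # accumulated integration weight
    labels: Counter = field(default_factory=Counter)

    def integrate(self, sdf: float, label: str,
                  truncation: float = 0.3, max_weight: float = 100.0) -> None:
        """Fuse one signed-distance measurement and its 2D segmentation label."""
        # Clamp the measurement to the truncation band [-truncation, truncation].
        d = max(-truncation, min(truncation, sdf))
        # Weighted running average; each measurement has an assumed weight of 1.
        self.tsdf = (self.tsdf * self.weight + d) / (self.weight + 1.0)
        self.weight = min(self.weight + 1.0, max_weight)
        # Accumulate one vote for the observed class label.
        self.labels[label] += 1

    def label(self) -> Optional[str]:
        """Return the most frequently observed label, or None if unobserved."""
        return self.labels.most_common(1)[0][0] if self.labels else None


def zero_crossing(x0: float, d0: float, x1: float, d1: float) -> float:
    """Interpolate the surface position between two neighbouring voxel centres.

    x0 and x1 are coordinates along one axis; d0 and d1 are the TSDF values
    stored there and must have opposite signs.
    """
    if d0 * d1 >= 0.0:
        raise ValueError("TSDF values must have opposite signs")
    t = d0 / (d0 - d1)  # fraction of the way from x0 to x1
    return x0 + t * (x1 - x0)
```

With voxel centres at 1.0 m and 1.1 m holding TSDF values of 0.05 and -0.15, `zero_crossing` places the surface a quarter of the way between them, which is how a distance field can localize surfaces more finely than the voxel size.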

Navigation System Integration

We also integrate the resulting map into a navigation system for a real-world autonomous vehicle. The semantic information encoded in the map helps interpret human instructions, thereby enabling robots to navigate safely within unstructured environments. Implementation details are summarized below:

- Prior Map-based Localization: We use PALoc to obtain the real-time global pose of the vehicle by registering the current scan against the prior map.

- Map Processing and Motion Planning: We transform the vertices of the traversable map into a 2D occupancy grid, where cells with zero occupancy probability are considered drivable. We then employ a hybrid A* algorithm for motion planning.

- Path Following: The planned waypoints are passed to the path-following module, which consists of a lateral trajectory tracking controller (for steering adjustments) and a longitudinal speed controller (for speed changes).
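The map-processing step above (mesh vertices to a 2D occupancy grid) can be sketched as follows. This is a minimal illustration rather than the released implementation. The vertex format `(x, y, z, label)`, the `DRIVABLE` label set, the grid parameters, and the rule that any non-drivable vertex blocks its cell are all assumptions made for the example.

```python
import math
from typing import Iterable, List, Tuple

Vertex = Tuple[float, float, float, str]  # (x, y, z, semantic label), assumed format

DRIVABLE = {"road"}  # assumed label set; the text names "road" as drivable


def build_occupancy_grid(vertices: Iterable[Vertex], resolution: float,
                         width: int, height: int,
                         origin: Tuple[float, float] = (0.0, 0.0)) -> List[List[float]]:
    """Project labeled mesh vertices onto a 2D grid of occupancy probabilities.

    Cells start at 1.0 (not drivable). A cell becomes 0.0 only if it contains
    at least one drivable vertex and no non-drivable vertex.
    """
    free, blocked = set(), set()
    for x, y, _z, label in vertices:
        col = math.floor((x - origin[0]) / resolution)
        row = math.floor((y - origin[1]) / resolution)
        if 0 <= row < height and 0 <= col < width:
            (free if label in DRIVABLE else blocked).add((row, col))

    grid = [[1.0] * width for _ in range(height)]
    for row, col in free - blocked:
        grid[row][col] = 0.0
    return grid


def is_drivable(grid: List[List[float]], row: int, col: int) -> bool:
    """A cell is drivable when its occupancy probability is exactly zero."""
    return grid[row][col] == 0.0


example_vertices = [
    (0.2, 0.2, 0.0, "road"),
    (0.7, 0.1, 0.0, "road"),
    (0.8, 0.2, 0.0, "grass"),     # shares a cell with a road vertex and blocks it
    (1.3, 0.2, 0.0, "sidewalk"),
]
example_grid = build_occupancy_grid(example_vertices, resolution=0.5, width=4, height=2)
```

A planner such as hybrid A* would then restrict its search to cells for which `is_drivable` returns true. Treating mixed cells as blocked is a conservative choice for this sketch. The actual system may resolve such cells differently.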

Experimental Results

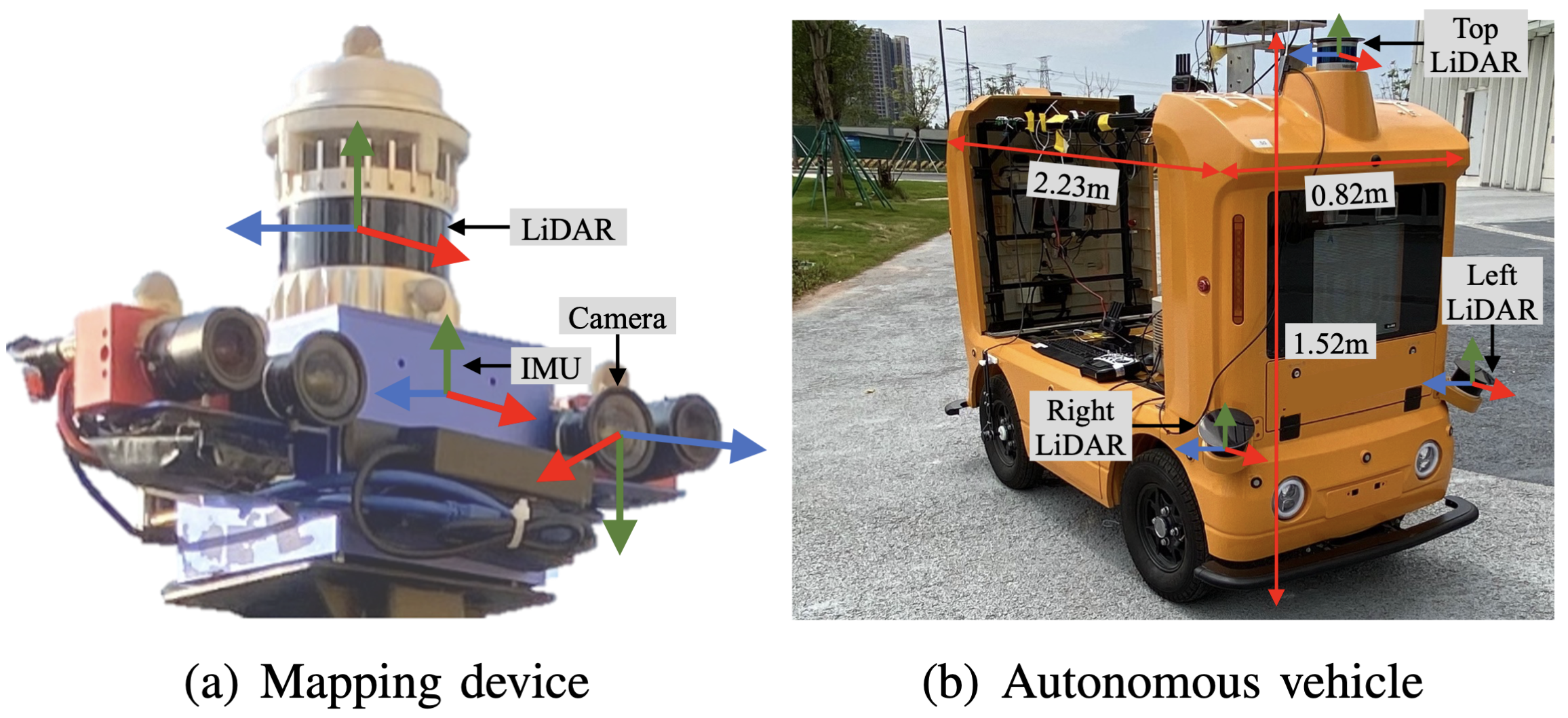

Real-World Experimental Platform

Real-world experimental platform.

Some Mapping Results

<img src="/assets/img/2024_tase_mapping/mapping_semantickitti.gif" width="70%" />

Test on SemanticKITTI

Test on FusionPortable

Real-World Navigation Results

Point-goal navigation test

Other point-goal navigation tests

More Results

To demonstrate the impact of measurement noise, varying view angles, and limited observations on mapping, we conducted a series of supplementary experiments on sequence 01 of the MaiCity dataset and compared the results with baseline methods.

Citation

Please cite our paper if you find the code and dataset useful for your research:

@article{jiao2024real,

title={Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments},

author={Jiao, Jianhao and Geng, Ruoyu and Li, Yuanhang and Xin, Ren and Yang, Bowen and Wu, Jin and Wang, Lujia and Liu, Ming and Fan, Rui and Kanoulas, Dimitrios},

journal={IEEE Transactions on Automation Science and Engineering},

year={2024},

publisher={IEEE}

}

Dataset:

@inproceedings{jiao2022fusionportable,

title={FusionPortable: A Multi-Sensor Campus-Scene Dataset for Evaluation of Localization and Mapping Accuracy on Diverse Platforms},

author={Jiao, Jianhao and Wei, Hexiang and Hu, Tianshuai and Hu, Xiangcheng and Zhu, Yilong and He, Zhijian and Wu, Jin and Yu, Jingwen and Xie, Xupeng and Huang, Huaiyang and others},

booktitle={2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={3851--3856},

year={2022},

organization={IEEE}

}