Jianhao Jiao

China, 2023

Position: I am currently a senior research fellow jointly at The Hong Kong Polytechnic University (PolyU), Department of Aeronautical and Aviation Engineering, and University College London (UCL), Department of Computer Science. I work closely with Prof. Weisong Wen in the Trustworthy AI and Autonomous Systems Lab at PolyU, and Prof. Dimitrios Kanoulas in the Robot Perception and Learning Lab at UCL.

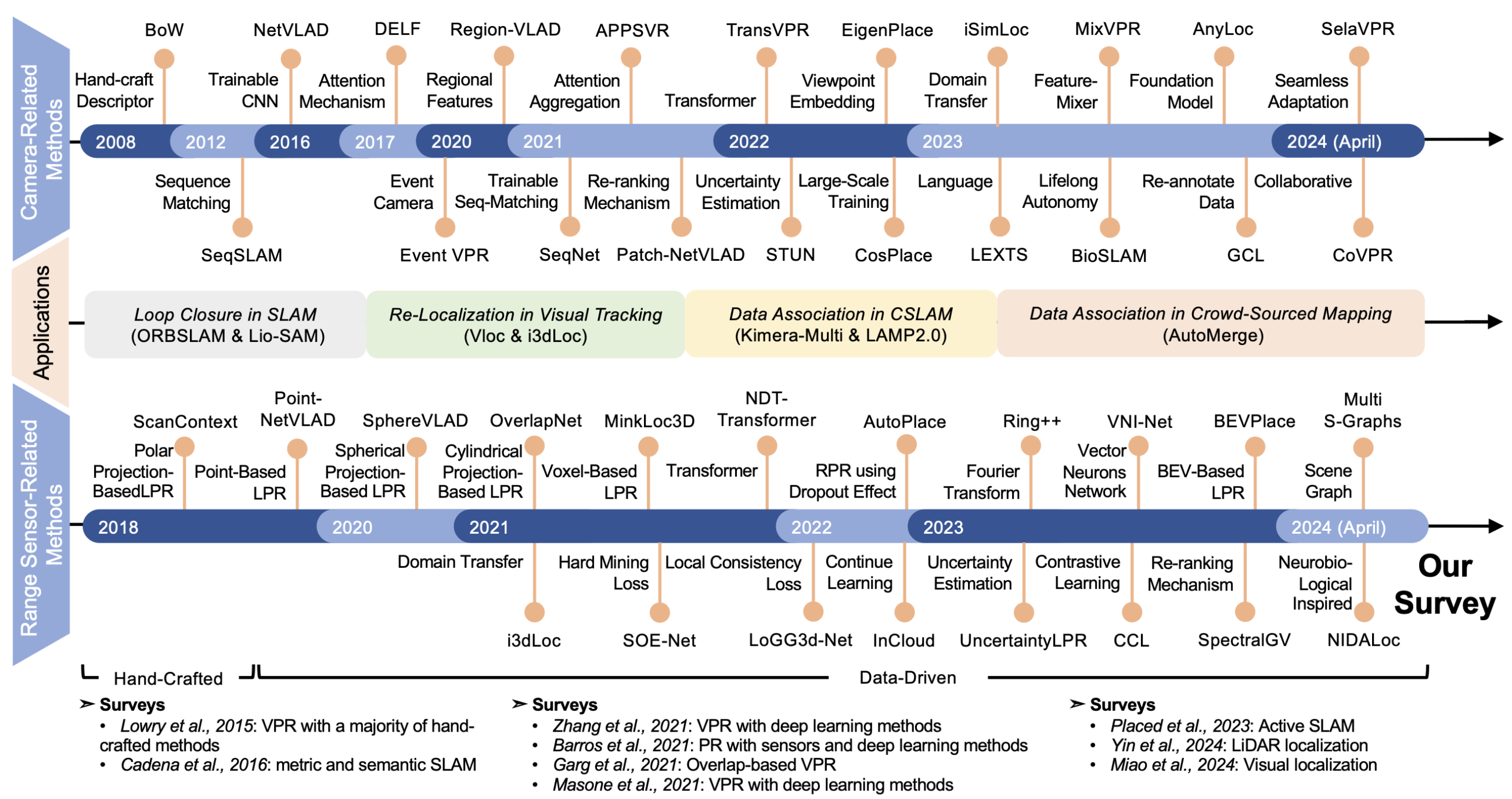

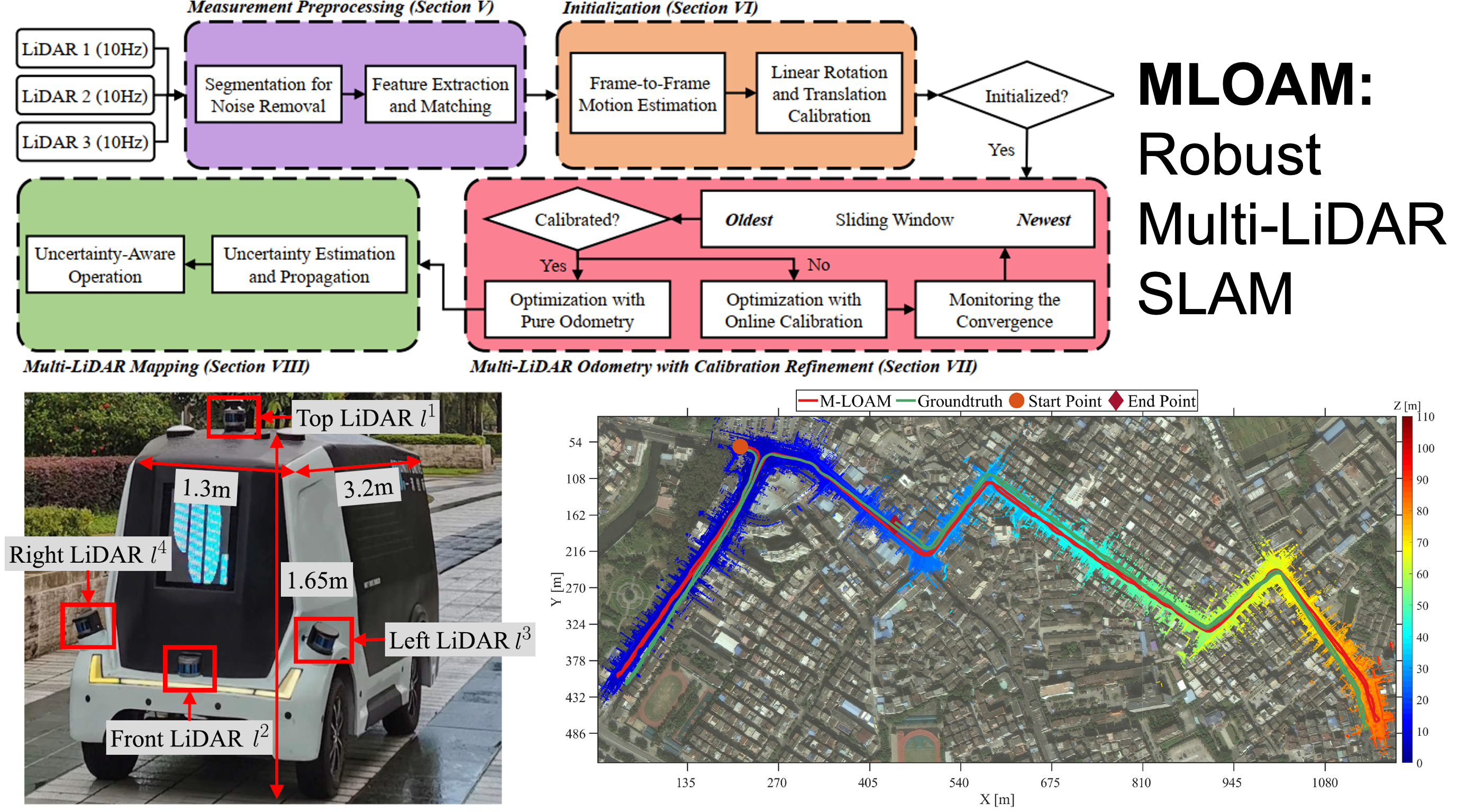

Brief Research Statement: My research in autonomous navigation and embodied intelligence includes notable contributions such as M-LOAM, FusionPortable, and OpenNavMap, a scalable, structure-free visual navigation system. My long-term goal is to develop lifelong, cognitive spatial memory mechanisms for autonomous systems. This work centers on sustainable autonomy and dynamic map maintenance that can scale to rapidly growing spatio-temporal demands in the most challenging, dynamic, and unstructured environments. This foundational capability is critical for applications such as logistics, infrastructure inspection, and high-stakes rescue missions in mines and forests.

Supervision: I have had the opportunity to supervise over 15 students, who have gone on to positions at leading academic institutions such as Imperial College London, the University of Toronto, UCL, the University of Science and Technology Beijing, Shenzhen University, and HKUST(GZ), as well as at renowned industry leaders such as Huawei, DJI, Zhuoyu Technology, RobotScience, and Agibot.

I received my Ph.D. in Robotics in 2021 from The Hong Kong University of Science and Technology (HKUST). I was fortunate to collaborate with excellent researchers, including Prof. Rui Fan, Dr. Lei Tai, Dr. Haoyang Ye, Dr. Peng Yun, and Prof. Jin Wu. I was a research associate at the Intelligent and Autonomous Driving Center (IADC) from 2022 to 2023 and a senior research fellow at University College London (UCL) from 2024 to 2025.

More details on my previous and ongoing projects can be found on the Research Projects page. Please feel free to contact me (jiaojh1994 at gmail dot com) if you have questions about our projects or are interested in collaboration.

News

| Date | News |
|---|---|
| Jan 31, 2026 | Two papers have been accepted to ICRA 2026, covering legged robot navigation (object following) and efficient Vision-Language-Action (VLA) models. Congratulations to Qianyi Zhang (NKU Ph.D.) and Cheng Yang (Rutgers University Ph.D.)! See you in Vienna! |
| Jan 30, 2026 | Invited by Prof. Junfeng Wu at the Chinese University of Hong Kong, Shenzhen (CUHK-SZ) to give a talk. We had a great discussion on marine robot navigation and look forward to future collaboration! |
| Dec 20, 2025 | Serving as an Associate Editor for IEEE Robotics and Automation Letters (RAL), overseeing the Localization and Mapping session. |
| Dec 4, 2025 | Invited by Prof. Martin Magnusson at Örebro University, Sweden, to give a talk. |
| Nov 29, 2025 | Invited by Prof. Xieyuanli Chen at NUDT to give a talk. |
| Nov 21, 2025 | Invited by Prof. Jieqi Shi at Nanjing University to give a talk at the Distributed AI (DAI) Conference, Embodied Intelligence Workshop. |
| Nov 7, 2025 | Invited by Prof. Chao Chen at the University of Manchester to give a talk. |
| Oct 1, 2025 | OpenNavMap received the Best Paper Award at the IROS 2025 Workshop: Open World Navigation in Human-centric Environments! |
| Sep 24, 2025 | Invited by Prof. Maurice Fallon at the University of Oxford to present our recent research advances. It was a great opportunity to share our work and engage with his group. |
| Sep 20, 2025 | One paper has been accepted to ACM SenSys. It proposes a novel robotic platform and datasets for testing Starlink satellite communication in motion. Congratulations to Mr. Boyi Liu (HKUST Ph.D.)! |

Featured Publications


LiteVLoc: Map-Lite Visual Localization for Image Goal Navigation

This paper introduces LiteVLoc, a hierarchical visual localization framework that uses lightweight topometric maps for efficient, precise camera pose estimation, validated through experiments in simulated and real-world scenarios.

International Conference on Robotics and Automation (ICRA), 2025

FusionPortableV2: A Unified Multi-Sensor Dataset for Generalized SLAM Across Diverse Platforms and Scalable Environments

We propose a multi-sensor dataset that addresses the generalization challenge of SLAM algorithms by providing diverse sensor data, motion patterns, and environmental scenarios across 27 sequences from four platforms, totaling 38.7 km. The dataset, which includes ground-truth (GT) trajectories and RGB point cloud maps, is used to evaluate state-of-the-art SLAM algorithms and to explore its potential for other perception tasks, demonstrating its broad applicability in advancing robotics research.

International Journal of Robotics Research (IJRR), 2024

Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments

We proposed an online, large-scale semantic mapping system that uses LiDAR-visual-inertial sensing to build a real-time global mesh map of outdoor environments, achieving high-speed map updates and integrating the map into a real-world vehicle navigation system.

IEEE Transactions on Automation Science and Engineering (T-ASE), 2024

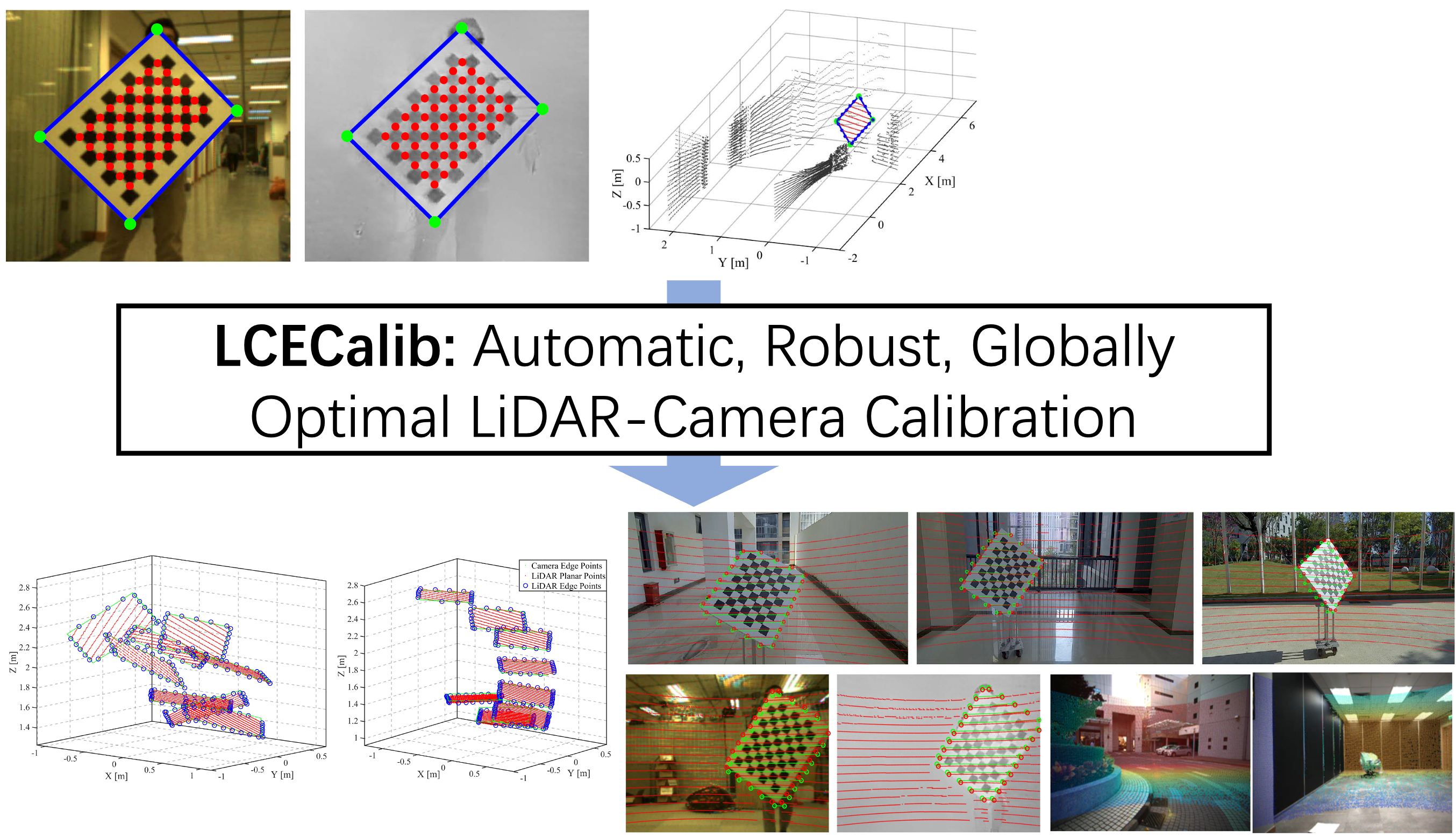

LCE-Calib: Automatic LiDAR-Frame/Event Camera Extrinsic Calibration With a Globally Optimal Solution

We proposed an automatic checkerboard-based approach for calibrating the extrinsics between a LiDAR and a frame/event camera by introducing a unified, globally optimal solution to the calibration optimization.

IEEE/ASME Transactions on Mechatronics (T-MECH), 2023

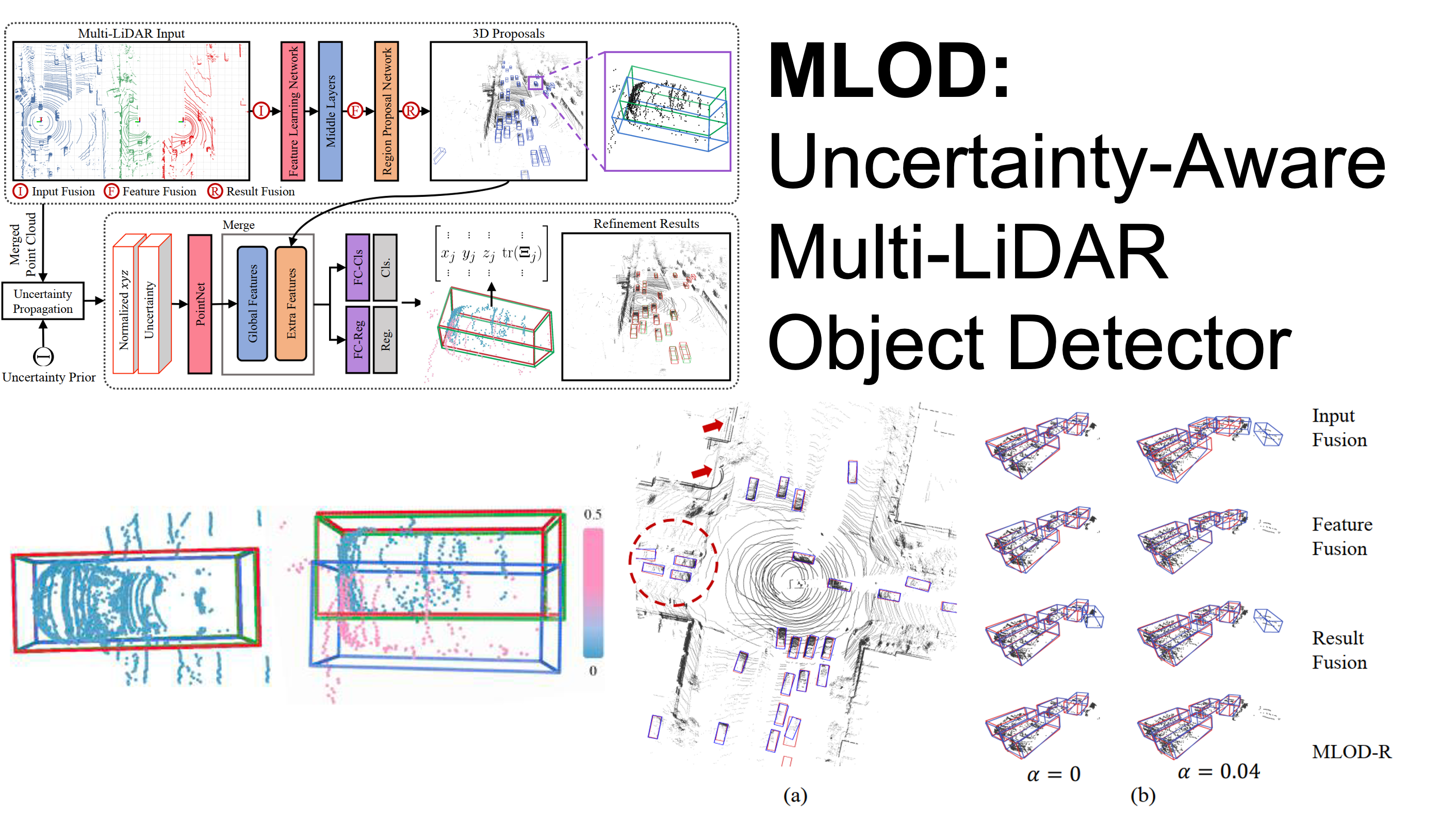

MLOD: Awareness of Extrinsic Perturbation in Multi-LiDAR 3D Object Detection for Autonomous Driving

We proposed a two-stage, uncertainty-aware multi-LiDAR 3D object detection system that fuses multi-LiDAR data and explicitly addresses perturbations of the LiDAR extrinsics.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

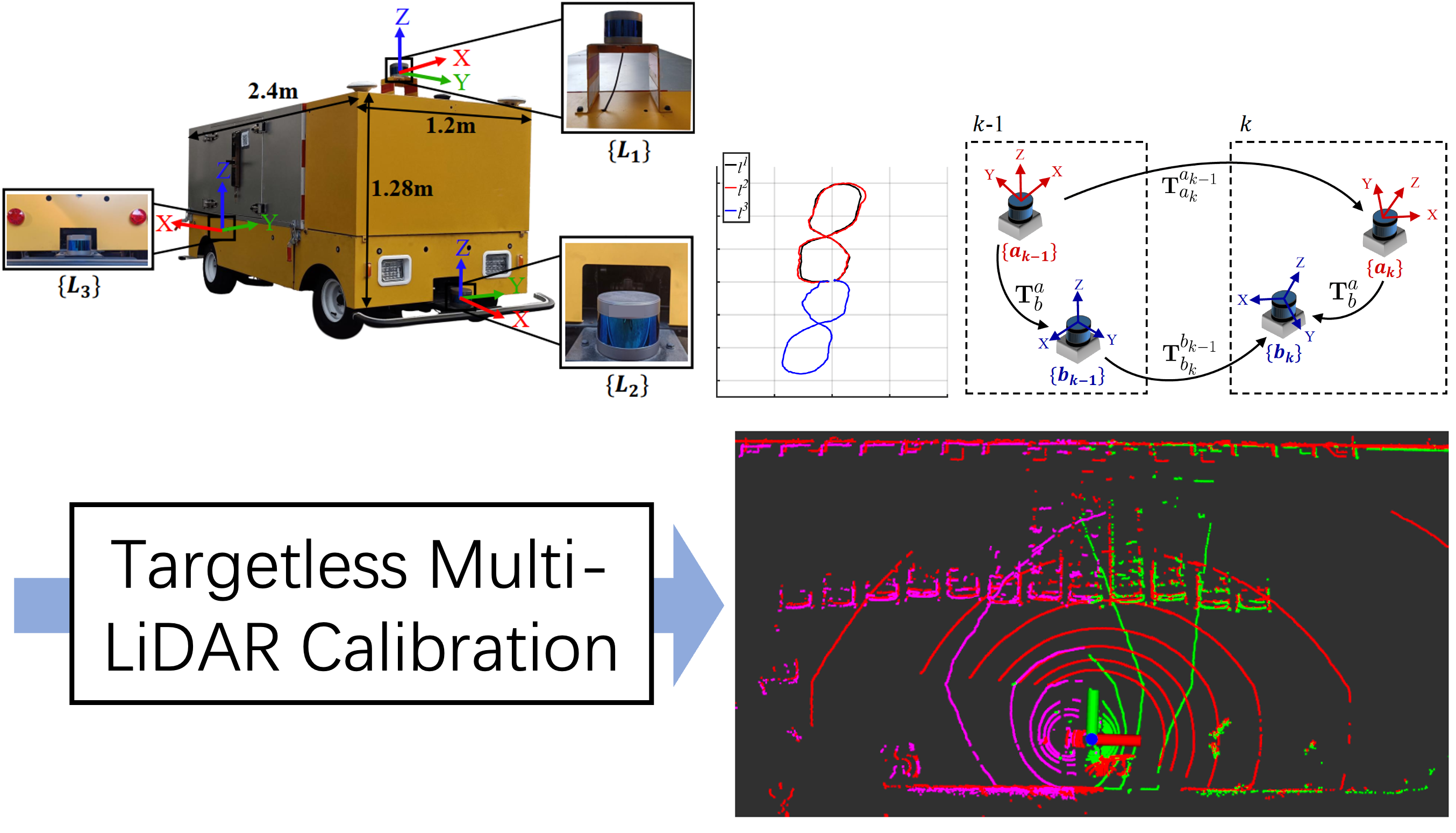

Automatic Calibration of Multiple 3D LiDARs in Outdoor Environment

We proposed an automatic multi-LiDAR calibration system that requires no calibration target or manual initialization, achieving high reliability and accuracy with minimal rotation and translation errors for mobile platforms.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019